Chris Padilla/Blog

My passion project! Posts spanning music, art, software, books, and more. Equal parts journal, sketchbook, mixtape, dev diary, and commonplace book.

- Checkout is a built-in GitHub action that will get your current main commit.

- Cypress has their own GitHub action that takes care of setting up your app.

- I had to set CYPRESS_BASE_URL to ensure that Cypress was looking for the correct endpoint, and not the localhost config that is set up in my cypress.config.js file.

- Cypress recommends using explicit version numbers for consistency and stability.

- You can choose which browser to test with using browser: chrome

- I'm passing in my build, start, and cypress commands, though I believe this is also implicit with the Cypress GitHub action.

- Load the landing page

- Get all non-mailto links

- Verify each of those links

- Along the way, exclude any edge cases.

- I'm setting the baseUrl based on my environment.

- I have a list of excludedLinks due to some scraping edge cases. (LinkedIn, for example, returns a 999 when a bot visits a page.)

- The real meat: After I extract the href attributes, I run through each and check the status with the expect method.

- Your intake number is random each time.

- Choose-your-own-adventure-style interactivity.

- You get hassled if you don't buy a coke!

- Homestarrunner.com was a webshow/game site/really impressive flash developer portfolio, come to think of it!

- Homestuck (then MS Paint Adventures) was a community-based choose-your-own-adventure series (though I think the community aspect fell off as the series grew).

- Several webcomics used the medium of a web page to hide jokes in the image alt text and even the mailto link's subject line!! (Thinking of Dr. McNinja, Nedroid, and Dinosaur Comics, to name a few.)

- Neopets was a genre of website that was also a game!! You explored the website in the same way you explored a virtual world. Not to mention that it sported its own virtual economy!

Junkyard Jam

Jamming over AC New Murder's Junkyard Jam. 🗑

Still so happy with how the vibe turned out with this track. It sounds like a leather jacket and sunglasses. 😎

By the way, you can —

Debating Stage Names

I'm gearing up to get my music on Spotify! A pretty exciting step!

But I've hit a problem: There are already 3 folks named Chris Padilla on Spotify.

It's not a new problem. In high school, I was one of 5 Chrises who played saxophone.

I'm used to having to mix up my usernames across apps and sites, but this is my whole name we're talking about!

Worth noting: It's not just a musician or an artist concern, it's a professional concern! Even Wes Bos and Scott Tolinski talked about this on Syntax.

I got this advice from Distrokid:

Ideally, if someone already has the name, you should come up with a different name. In the world of actors, for example, no two actors are allowed to have the same name as each other and both belong to SAG (the actors union). That's why Samuel L. Jackson is Samuel L. Jackson, and not Sam Jackson or Samuel Jackson -- those names were taken. If you want to look like a pro, suck it up and come up with a different name if yours is already taken.

Fair enough. So now I'm in that spot where I'm coming up with a name.

The process so far is somewhere between writing down inspirations, aesthetics I like (colors, themes, the like), and also turning to a random name generator to see what sticks.

Benefits of a Stage Name

I've liked being just "Chris Padilla" pretty much everywhere. It's kept things simple and easy for the most part. Even with music, just knowing that it's my solo project and that it can all be pointed back here.

I'm not the only Chris that does this, either!

But.

There are some exciting opportunities with stage names.

First: A stage name can encapsulate a project. Done with a set of ideas and themes? No problem! Change names and move on! Prince did it for more contractual reasons, but it still worked for him!

Second: It brings an opportunity for clarity between those projects. A problem I'm starting to have is that when someone asks "What do you do?" The answer is: Software, music writing, saxophone performing, drawing, blogging, teaching. Potentially, the list could continue to grow over a lifetime! As a human being, that all can be contained within one Chris Padilla. But if you want to talk marketing terms, it gets difficult to say "Chris Padilla — Developer, Musician, Doodler, Internet Guy, Baker of Pies..." and so on.

Third: A healthy dose of separation between "The Real You" and "Public You." Derek Sivers says this well. I don't have the pleasure of being big enough for strangers on the internet to criticize me, but should I ever be, it's easier to say "Well, they're talking about Chris D Padilla. Not me, just Chris."

Fourth: There's a certain freedom that comes from a stage name. I always felt that by performing on stage, you had a chance to embody a character. To step into a person, mindset, and mood you might not otherwise channel in your day-to-day life. A stage name is just another layer of that. Online, there's no clear stage. But a name can be like putting on a super-suit in that way.

It's funny how some domains don't have that choice. Authors, speakers, most professional work — it's all on your real name for the most part. But I think there is something to be said about how we are separate from the roles we step into.

(Well drat, but that's not entirely true! R.L. Stine, Lemony Snicket, Paul Creston: all pseudonyms!)

But, Then Again

There are also the benefits of consistency! It's unambiguous, feels less elevated and pretentious, less "webby" (I'm thinking of Twitter handles and gaming tags), more personal, and carries any reputation with you even as you pivot and transition through domains.

Also, maybe this would be more of a problem if my name were Chris Martin. There's a really famous Chris Martin already, of course.

And then, on top of that, context matters! If I'm talking about L. Armstrong, you'll know who I mean if I'm in the midst of talking about famous jazz musicians. You won't confuse Louis Armstrong for Lance Armstrong there.

If we're talking about SEO, a quick search of "Chris Padilla Sax" will pull up my YouTube channel of Sax videos. You won't even be recommended the Wikipedia page for former Under Secretary for International Trade Christopher A. Padilla.

So, maybe it's not as much of a problem.

New Album - Meditations

Reveries on guitar. Improvising with gestures, harmonies, and melodic lines. 🧘‍♀️💭

What better way to start a new year than with some dreaming?

Cover art by the unbelievably talented Calvin Wong. (Web | Instagram).

🙉 Listen on Spotify — A first for me!!

Making Next.js Links Flexible

It's a little too verbose to use Next's Link component with an anchor tag each time:

<Link href={album.link}>

<a target="_blank" rel="noopener noreferrer">

Support my music by purchasing the album on Bandcamp!

</a>

</Link>

There are design reasons for this. For example! It's easier to control opening a link in a new window when the anchor tag is exposed.

BUT we can do better. Here's a HOC that will check if the link is external (includes http) or internal (/music/covers):

import Link from 'next/link';

import React from 'react';

const NextLink = ({ children, ...props }) => {

let newWindowAttr = {};

if (props.href.includes('http')) {

newWindowAttr = {

target: '_blank',

rel: 'noopener noreferrer',

};

}

return (

<Link {...props}>

<a {...newWindowAttr}>{children}</a>

</Link>

);

};

export default NextLink;

As a rule on my site, internal links stay in the same window, external links open in a new window. Parsing the url this way does the trick.
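One caveat with checking href.includes('http'): an internal path that happens to contain that text, such as a hypothetical /blog/http-basics, would also be treated as external. Here's a minimal sketch of a stricter check, assuming external links always start with http://, https://, or a protocol-relative //. The isExternalLink and getNewWindowAttr helpers are illustrative names, not part of the component above:

```javascript
// Hypothetical helper, not part of the original component.
// Treats a link as external only when it starts with "http://", "https://",
// or is protocol-relative ("//example.com").
const isExternalLink = (href) => /^(https?:)?\/\//i.test(href);

// Builds the extra anchor attributes for a given href.
const getNewWindowAttr = (href) =>
  isExternalLink(href) ? { target: '_blank', rel: 'noopener noreferrer' } : {};

console.log(isExternalLink('https://example.com/album')); // true
console.log(isExternalLink('/music/covers')); // false
console.log(isExternalLink('/blog/http-basics')); // false
```

Inside NextLink, the condition could then read isExternalLink(props.href), and the rest of the component stays the same.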

Then we can use it like so:

<NextLink href={album.link}>

Support my music by purchasing the album on Bandcamp!

</NextLink>

A Love Letter to 2000's YouTube

I had this conversation with my sister Jenn the other day, and this post essentially came out of my mouth over the phone.

Before algorithms, video monetization, and influencers, early YouTube was totally amateur. And I loved it!

Some animators in the industry that are around my age can point to Newgrounds as the place they started messing around with their craft. (Many guests on Creative Block talk about this shared history.)

Some folks in the web development space got their start customizing MySpace and Tumblr themes (or Neopets in my case!).

Not everyone that fooled around here went on to careers related to the website, but for kids, the draw of these webpages was that it was a very open space to experiment. Especially because no one was doing it particularly well!

YouTube was absolutely that for film directors, sketch artists, and memesters.

I would share with you one of my videos, but it's honestly PRETTY EMBARRASSING! Just think early Smosh, but a lot more wholesome.

An amateur space has the same impact that professional sports have on kids. They see people playing soccer at an incredibly high level, but can easily throw a ball in their own backyard and understand the concept of aiming for a net. (This insight actually comes from watching a Game Theory video on eSports from 7 years ago, a really deep nugget!) Creativity is encouraged through less than perfect models.

Especially as a kid! Most of the videos I adored were filmed at their parents' house, in their bedrooms.

It comes back to what I was talking about with Terry Pratchett and real life influences. I was a kid! I had a camera! I had Windows Movie Maker! I could do it all too!

Skip ahead a few years. It all changed for me when Oprah opened a YouTube channel. The environment pivoted from DIY to a marketing machine. Then followed algorithms, monetization, and YouTube eventually becoming the second most visited domain, behind only Google.

The other day in a big box grocery store I saw a product named "Influencer starter kit." It's wild to me how industrialized the space has actually become.

All this to say a few things:

The internet is full of highly polished final products. But I'm trying not to sweat being an amateur. It's what was special about that time on YouTube. Many of the people I admire even still take on that spirit!

Those spaces for fooling around are still out there. Discord servers, forums, online groups.

Blogging is a pretty safe arena for this, by the way. You're separated from metrics, it's a bit more of a quiet corner (a morning breakfast table, as Trent Walton puts it.) And it feels like home.

Also, don't ever delete your videos. No matter how cringe!

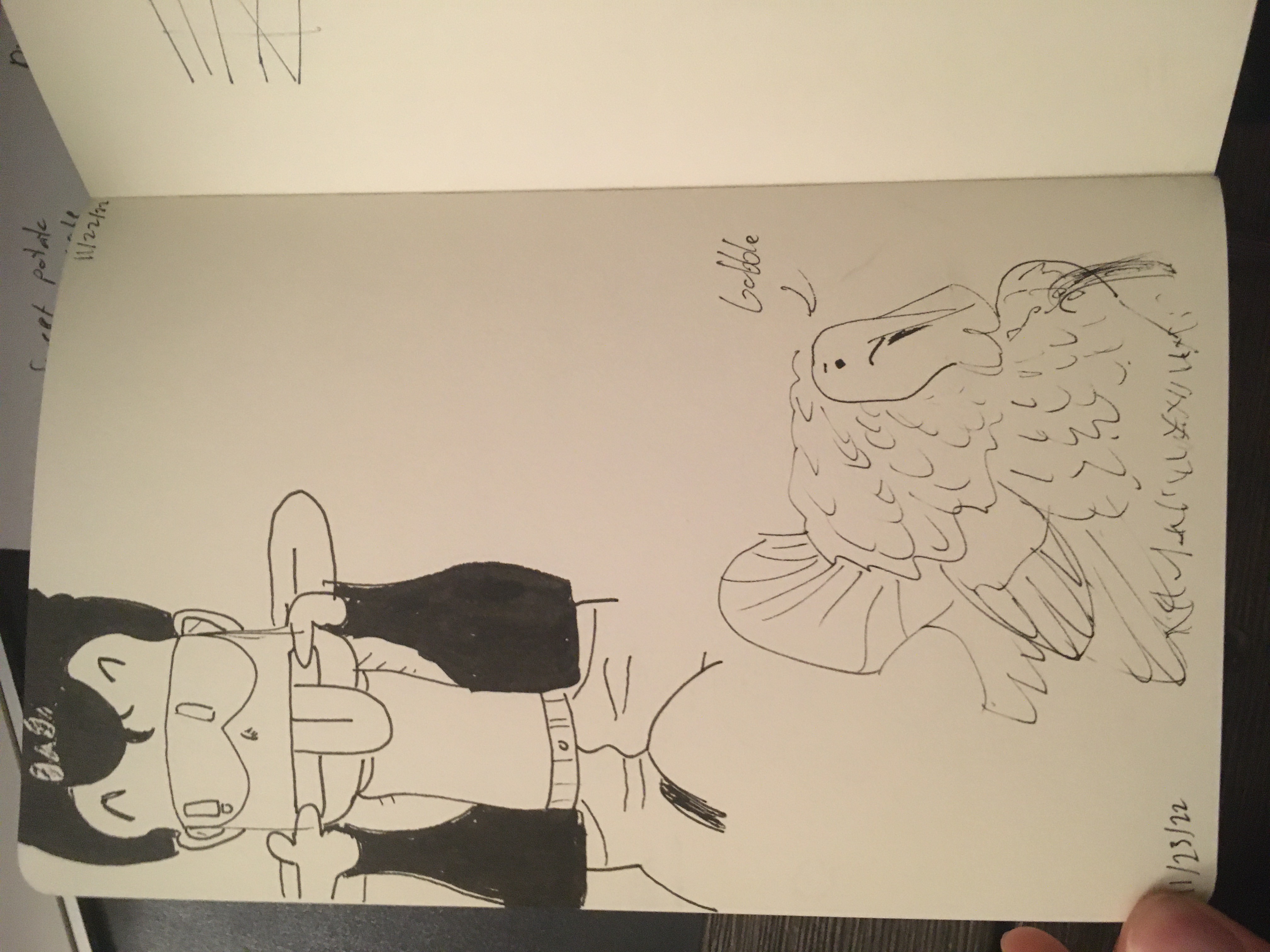

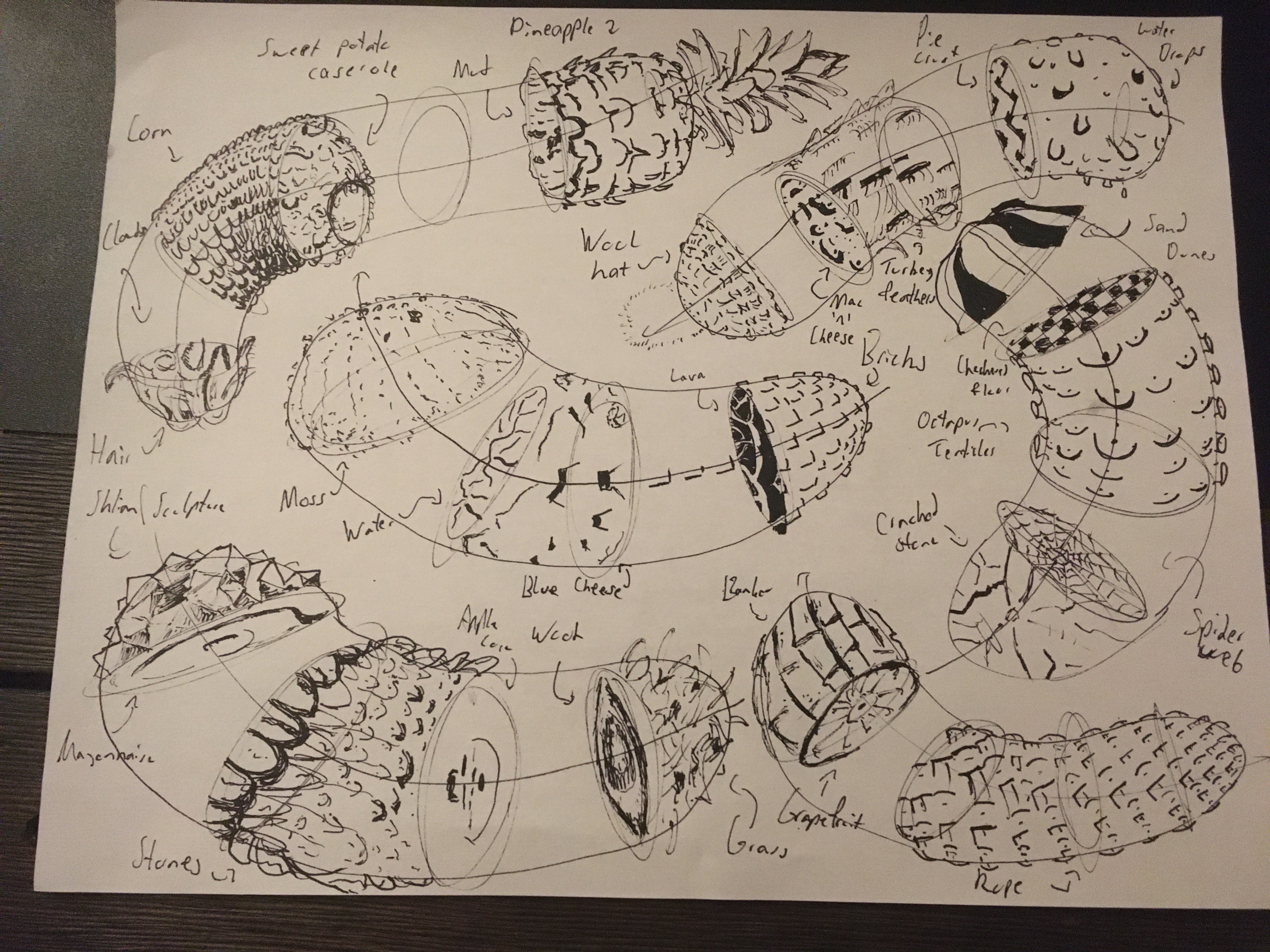

Birds and Texture Sketches

Couple of my favorite birds 🦜🦃

My favorite so far: a study on texture dissections along organic forms. Completed as an assignment for Drawabox.

Major Seven Dreamy Idea

A floaty idea that hasn't quite made it into a full tune yet. 🍃

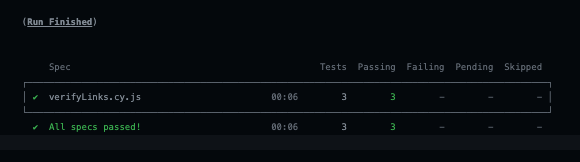

Running Cypress in CI with GitHub Actions

I recently set this site up with Cypress for a few end-to-end tests.

(An aside: last time I referred to it as an End-to-end test, but on further reflection, we're probably looking more at these being integration tests. There's no major user interaction aside from GET requests. But, I digress in semantics. Chris Coyier puts the subtle differences nicely over on his blog.)

Here's a look at a new link verification test running in Cypress locally:

With the tests now giving me the ever so satisfying All Tests Passed notification, it's time to automate this!

GitHub Actions

There are many CI/CD solutions and approaches. In fact, this site is already using Vercel's GitHub App to automatically deploy pushes to master.

For simplicity I'm going to keep that in place, and run Cypress with GitHub Actions to automatically run my tests.

Configuration

All that's needed to add an action is to create a YAML config file in the .github/workflows directory at the root of the repo. Here's what mine looks like:

name: Cypress Tests

on: [push]

jobs:
  cypress-run:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout
        uses: actions/checkout@v3

      # Install NPM dependencies, cache them correctly
      # and run all Cypress tests
      - name: Cypress run
        uses: cypress-io/github-action@v5.0.5 # use the explicit version number
        env:
          CYPRESS_BASE_URL: ${{ github.event.deployment_status.target_url }}
        with:
          browser: chrome
          build: npm run build
          start: npm start
          command: npx cypress run

Stepping through the important parts:

Voilà! Tests are now running with each push to master!
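On the CYPRESS_BASE_URL line in the workflow: Cypress reads environment variables prefixed with CYPRESS_ and lets them override the matching config option, which is why the variable wins over the baseUrl in cypress.config.js. The sketch below only mimics that precedence in plain JavaScript. The resolveBaseUrl function and both URLs are hypothetical, and this is not how Cypress is implemented internally:

```javascript
// Hypothetical illustration of config precedence, not a Cypress API.
// `config` stands in for the object exported from cypress.config.js and
// `env` stands in for process.env on the CI runner.
function resolveBaseUrl(config, env) {
  // In this sketch, a non-empty CYPRESS_BASE_URL takes priority over the config file value.
  if (env.CYPRESS_BASE_URL) {
    return env.CYPRESS_BASE_URL;
  }
  return config.baseUrl;
}

const localConfig = { baseUrl: 'http://localhost:3000' };

// Locally, no override is set, so the config file value is used.
console.log(resolveBaseUrl(localConfig, {})); // http://localhost:3000

// In CI, the workflow's env block supplies the deployed URL (made up here).
console.log(resolveBaseUrl(localConfig, { CYPRESS_BASE_URL: 'https://example.vercel.app' })); // https://example.vercel.app
```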

Next Steps

As it's currently set up, I'll get an email on failed tests, but Vercel is still picking up the new code and deploying it. Not an ideal solution, but since I'm a small operation here, I'm not in a hurry to add that structure.

But for the day that I do! The next step would be to remove my Vercel GitHub app from the repo. Then, I can add a deploy action as it's outlined in this post on Vercel's repo:

deploy_now:
  needs: build
  runs-on: ubuntu-latest
  steps:
    - uses: actions/checkout@master
    - name: deploy
      uses: actions/zeit-now@master
      env:
        ZEIT_TOKEN: ${{ secrets.ZEIT_TOKEN }}

The environment token can be retrieved by following these steps.

Verify Site Links with Cypress

Here I'm continuing to flesh out my Cypress tests. I've had a few blog pages that have been pushed live, but turn up as 404s due to a formatting issue.

Here, I'm writing a script to verify that all the links on my main page, including blog links, return a 200 status code.

Writing the Test

Here's the code from last time verifying my RSS Feed:

describe('RSS', () => {

it('Loads the feed', () => {

cy.request(`${baseUrl}/api/feed`).then((res) => {

expect(res.status).to.eq(200);

});

});

});

I'm going to continue to use res.status to verify a 200 response. It's simpler than loading up the page.

To run this, I'll need to:

Here it is in action:

import { BASE_URL, BASE_URL_DEV } from '../../lib/constants';

const baseUrl = process.env.NODE_ENV === 'production' ? BASE_URL : BASE_URL_DEV;

const excludedLinks = ['bandcamp', 'linkedin', 'instagram'];

describe('Test Broken Links', () => {

it('Verify navigation across landing page links', () => {

cy.visit(baseUrl);

cy.get("a:not([href*='mailto:'])").each((linkElm) => {

const linkPath = linkElm.attr('href') || ''; // fall back to '' when an anchor has no href

const shouldExclude = excludedLinks.reduce((p, c) => {

if (p || linkPath.includes(c)) {

return true;

}

return false;

}, false);

if (linkPath.length > 0 && !shouldExclude) {

cy.request(linkPath).then((res) => {

expect(res.status).to.eq(200);

});

}

});

});

});

A couple of lines worth pointing out:

From this, Cypress will run through each link and return results like so:

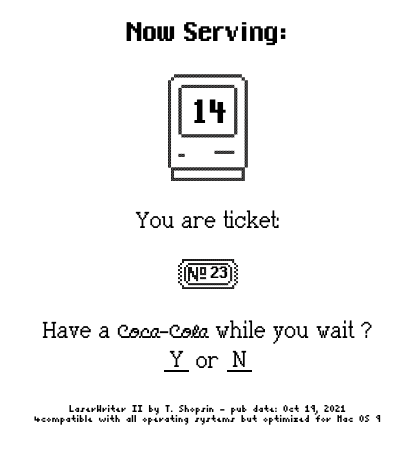
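The exclusion check inside the test can also be pulled out into a small function that runs without Cypress, which makes the edge cases easier to reason about. Here's a minimal sketch, assuming the same excludedLinks list. The name shouldCheckLink is a hypothetical helper, and it uses Array.prototype.some in place of the reduce in the test:

```javascript
const excludedLinks = ['bandcamp', 'linkedin', 'instagram'];

// Hypothetical helper: returns true when a link should be requested.
// Skips empty or missing hrefs, mailto links, and any URL containing an excluded term.
function shouldCheckLink(href, excluded = excludedLinks) {
  if (!href || href.includes('mailto:')) {
    return false;
  }
  return !excluded.some((term) => href.includes(term));
}

// Sample hrefs, made up for illustration.
const sampleLinks = [
  '/blog/some-post',
  'https://www.linkedin.com/in/someone',
  'mailto:hello@example.com',
  '',
];

console.log(sampleLinks.filter((href) => shouldCheckLink(href)));
// [ '/blog/some-post' ]
```

In the Cypress test, the body of the each callback could then call cy.request only when shouldCheckLink(linkPath) returns true.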

Have a Coke While You Wait?

The other day I was waxing nostalgic for the way websites used the full canvas of web tech to subvert expectations (as one does).

The website for Tamara Shopsin's LaserWriter II also does a pretty great job at this.

Go take a look before reading ahead!

A few things that made me smile:

And this one is my favorite: You actually have to wait a few seconds before getting to the juicy book details. The website playfully builds anticipation!

On the internet in 2023, that's a pretty big deal! When everything is immediate and served quickly, it's a really special experience to have to sit and wait, not due to loading, but by design.

And best of all, it's not all just for fun and gags! Everything I mentioned above ties in so well with the book. It's referential and matches tone superbly.

Honestly, the website alone sold me on the book. I then loved the book so much that I wrote an album based on it.

A Love Letter to 2000s Websites

Ryan North over at Dinosaur Comics has a fancy new overlay:

So uh have you ever wished you could read Dinosaur Comics but ALSO be reading newly-public-domain Winnie-the-Pooh and Piglet and not-quite-yet-public-domain-but-hopefully-Disney-is-cool-with-it Tigger?? HAVE I GOT AN OVERLAY FOR YOU, MY FRIEND. Thanks to James Thomson for pulling it together!

I remember the first few overlays on the site when they came out! Heck, I also remember the contest that ran for the custom footers on Dinosaur Comics dot com!

I just love this sort of thing! Really novel ways of creating unique interactions on the web. Call me an old man (I am 30 now!) but they just don't make them like they used to, do they??

I'll truncate my nostalgia infused griping to these sentences: Social Media apps have made the web more accessible to more people, giving a platform for voices that otherwise didn't have one. Awesome! AND, aesthetically, a minimal approach has overtaken some of the charm from the old internet. Less awesome!

Ok! With that out of my system, here's this: I know that not every website was overlaying Winnie the Pooh on top of their comics. I realize I'm remembering selectively.

So let me pivot from complaining to praising some of my favorites:

I think the running thread here is the creative ways folks used the platform of the web. Not just as a way of delivering content the way that Twitter does. But to really squeeze all the juice that's in the web as its own medium.

Put another way, art didn't end at the edge of the canvas.

I'm really happy to have contributed my own piece through AC:NM. A whole visual novel, as a website! Jenn and I very much started the project in this spirit and with inspiration from those older sites. That era of the internet really felt like the wild west where anything was possible.

On to making more quirky sites!

Impression Minus Expression Equals Depression

That equation feels right.

This comes from the legendary Disney animator and teacher Walt Stanchfield (a lead character animator for The Aristocats, to name one). I've written before about how Walt describes the curious act of performing with no audience. This equation comes from his handouts for gesture drawing:

(Here, Walt is talking about the benefits of staying healthy enough to have the energy to create, another juicy point.)

You must create. The injunction of life is to create or perish. Good physical and mental conditioning are necessary to do this. Remember this: the creative energy that created the Universe, created you and its creative power is in you now, motivating you, urging you on - always in the direction of creative expression. I have a formula: "Impression minus expression equals depression". This is especially applicable to artists. We have trained ourselves to be impressed (aware) of all the things around us, and in the natural course of our lives those impressions cry out to be expressed - on paper, on canvas, in music, in poetry, in an animated film. So shape up.

Ain't that the truth?

It's still worth the reminder. When you do professionally creative work (like animation or software!) you are still constantly taking in the world and are falling in love with all sorts of things - books, music, stories, and technologies.

The best way to express that love is through getting down to business and making something.

Sketches

After finishing my last sketchbook, I'm on to the next one!

Here are some of my favorite pages from this week:

I'm also going through Andrew Loomis' "Fun With A Pencil". An older style of caricature, but it's been fun to get comfortable with construction! Here are some head potatoes from my first studies:

Debussy - Rêverie

💭

Little snippet from the gorgeous Debussy piece.

Which was, fun fact, played in the final Anthony Hopkins scene in Westworld Season 1! Alongside a Radiohead string quartet cover, no less!

Terry Pratchett and Real Life Inspirations

It never really occurred to me growing up that I could work in software.

I grew up playing video games and when I finished them, credits would roll. A flood of names would dash across the screen.

My parents explained to me after watching a movie that it took all these people to make a film. And there was a realness to that.

But with video games, growing up with Nintendo, all the names were Japanese. And developers, unlike firefighters, police officers, and construction workers, don't have as clear an answer to Richard Scarry's question "What do people do all day?" As far as I knew: "Well wow, you have to be Japanese to make games!!"

It wasn't until I met living, breathing people that were making a career of working on the web that it clicked. And that's even after I had done it as a hobby since I was a kid!

Pratchett and Inspiration

I mentioned I'm reading Terry Pratchett's biography. I even wrote a bit about how he gains great satisfaction from the cycle of being a reader and writing for readers.

To continue the conversation - sharing things online helps a great deal. Here's further validation. This time, from the angle of the importance of having heroes that feel real.

Pratchett talks about writing fan mail to Tolkien in his biography, but I like this heartfelt clip from A Slip of the Keyboard: Collected Non-Fiction:

[W]hen I was young I wrote a letter to J.R.R. Tolkien, just as he was becoming extravagantly famous. I think the book that impressed me was Smith of Wootton Major. Mine must have been among hundreds or thousands of letters he received every week. I got a reply. It might have been dictated. For all I know, it might have been typed to a format. But it was signed. He must have had a sackful of letters from every commune and university in the world, written by people whose children are now grown-up and trying to make a normal life while being named Galadriel or Moonchild. It wasn’t as if I’d said a lot. There were no numbered questions. I just said that I’d enjoyed the book very much. And he said thank you. For a moment, it achieved the most basic and treasured of human communications: you are real, and therefore so am I.

Just like my last post, I can't help but think about the internet. How ridiculously lucky are we to have much closer access to artists, writers, developers — all world class?

Close To Home

It's the humanizing element that can solidify the inspiration, though. It's one thing to hear stories of born prodigies, and another to hear stories of people that started from a place closer to home.

Granny Pratchett told young Terry about G. K. Chesterton. She loaned him Chesterton's books, which he ate up, quickly becoming a fan. Most importantly, though, she told Terry that Chesterton was a former resident of their town, Beaconsfield.

[W]hat seemed to have grabbed Terry most firmly in these revelations was the way they located this famous writer on entirely familiar ground – in Beaconsfield, at the same train station where Terry had but recently laid coins on the tracks for passing trains to crush... It brought with it the realization that, for all that one might very easily form grander ideas about them, authors were flesh and blood and moved among us... And if that were the case, then perhaps it wasn't so outlandish, in the long run, to believe that one might oneself even become an author.

I think we all have stories like this. Some of us may even be luckier - our teachers were this living, breathing validation that what you dream of doing can be done by you.

A similar sentiment from my last post: It's wildly important for you to share your work online. Maybe, even, it's just as important that you do the work you're called to do, period. Even if you're not on as global a scale as a published author. For many of us, it's the section-mates in band, the English teachers, the supervisor at work, or even the friends we know from growing up that help solidify dreams into genuine possibilities.

Not to mention the impact you could have, just by sending an email.