Chris Padilla/Blog

My passion project! Posts spanning music, art, software, books, and more. Equal parts journal, sketchbook, mixtape, dev diary, and commonplace book.

- I back up my computer with Time Machine to a WD My Passport drive.

- My computer is backed up remotely to Backblaze

- On a WD Passport I have old files, videos, and photos from older computers that aren't

- Those older files are also synced with Backblaze.

- SQL databases are Relational Databases

- Other database structures are Non-Relational, or NoSQL Databases

- User

- Items

- Orders

- We start with the user ID

- We query an order based on the user

- We query items based on what's contained in the order

- We massage the data to show what's relevant to our query.

- Open my repo in VS Code

- Load in any environment variables

- Start the local server

- Open up Chrome

- Visit localhost:3000

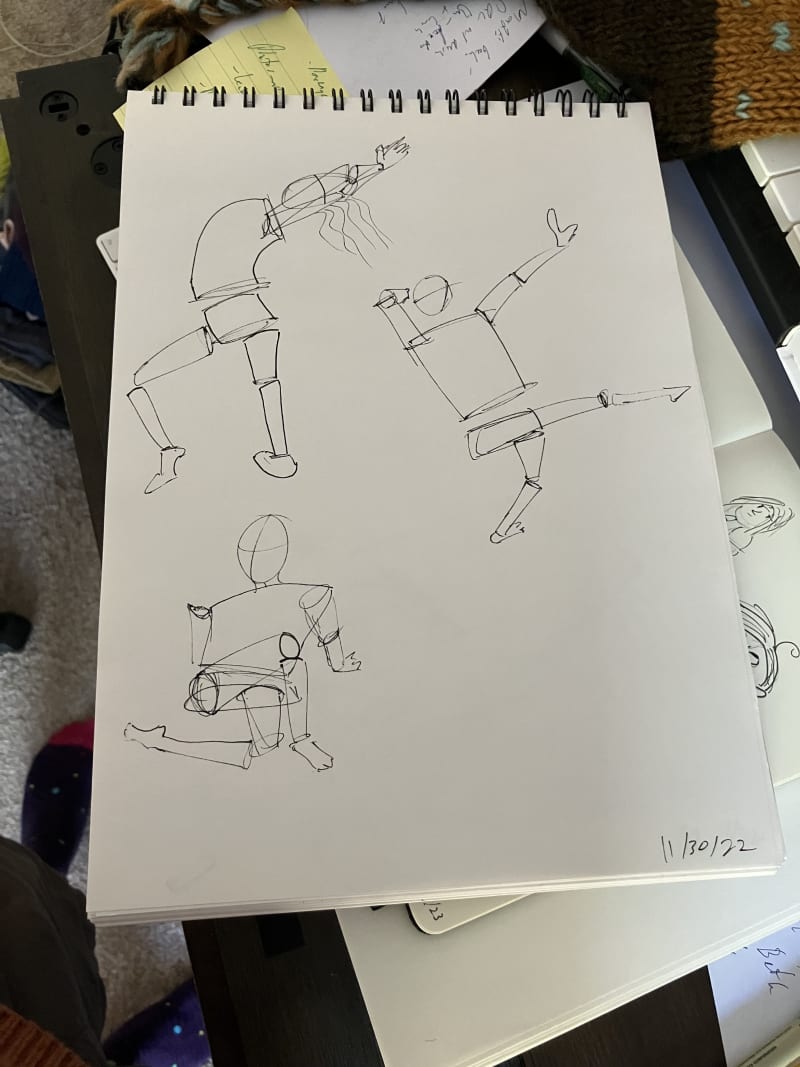

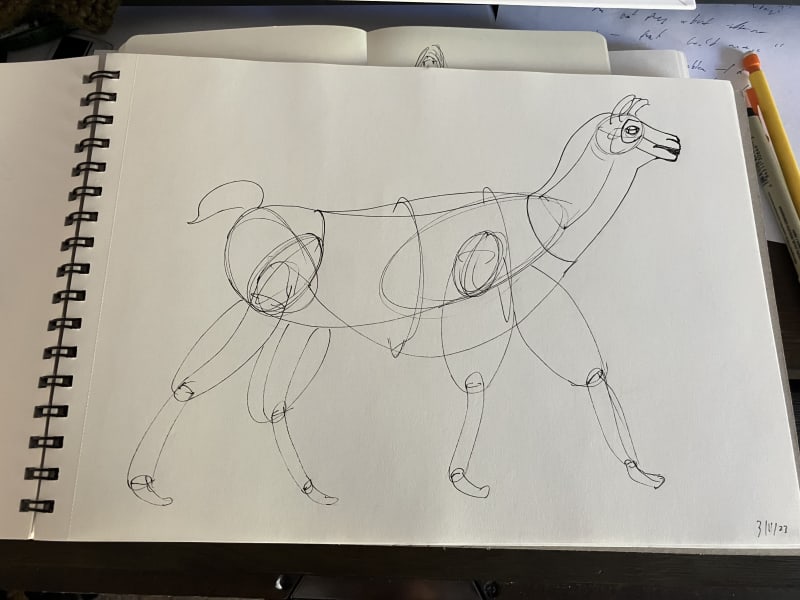

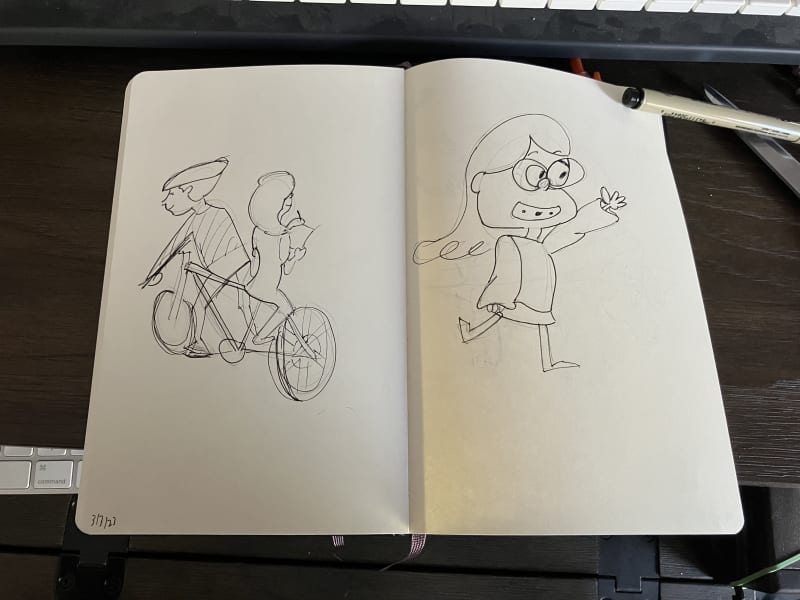

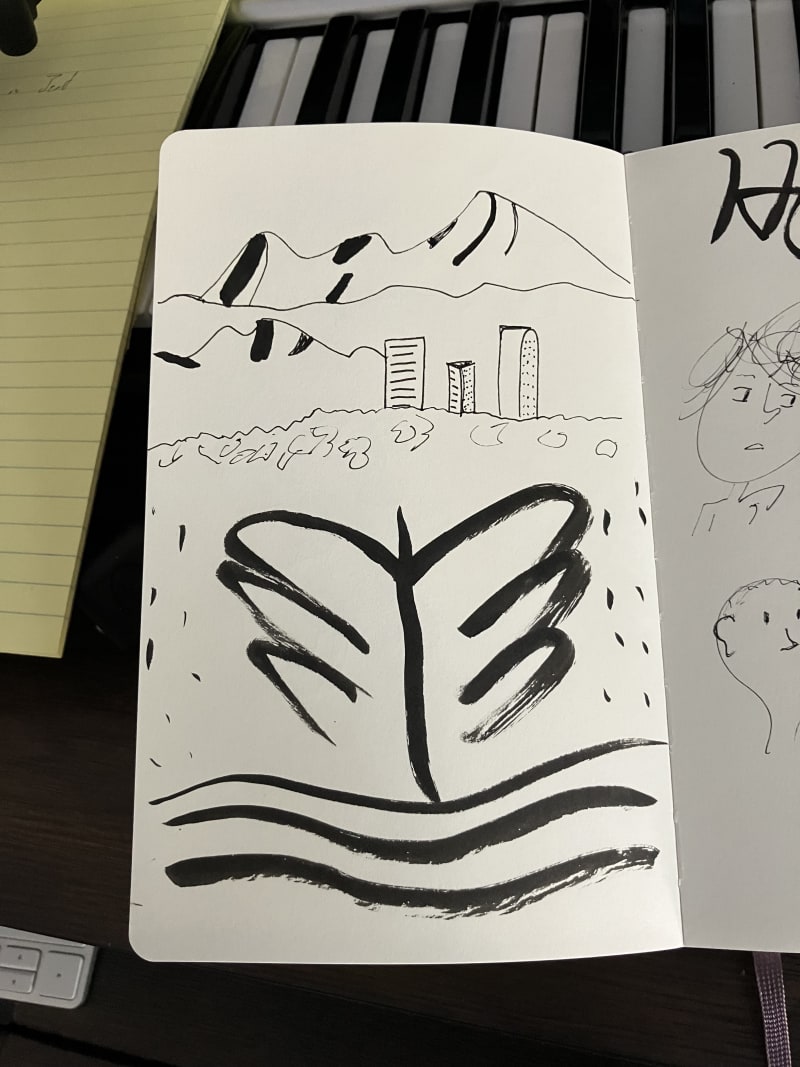

Finishing Sketchbook No. 3

Another one down!! I picked this one up to have more room to explore than my little Moleskine allowed.

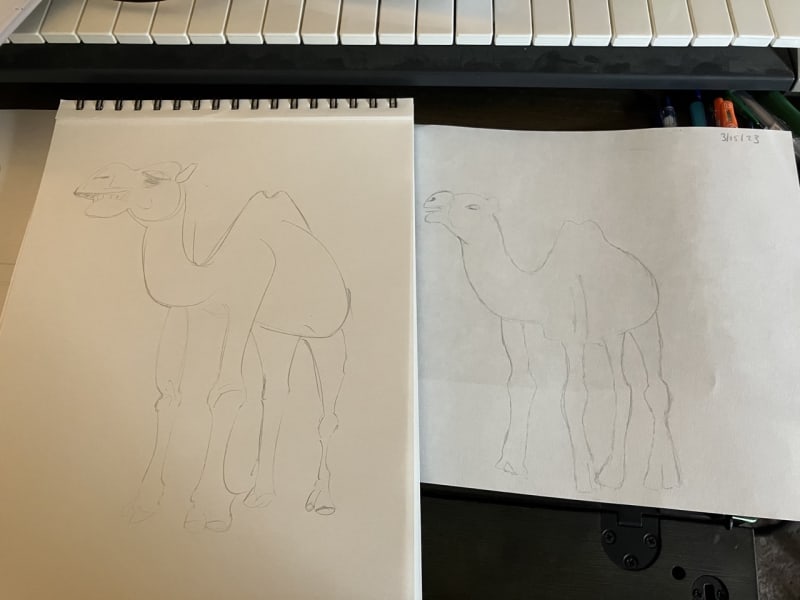

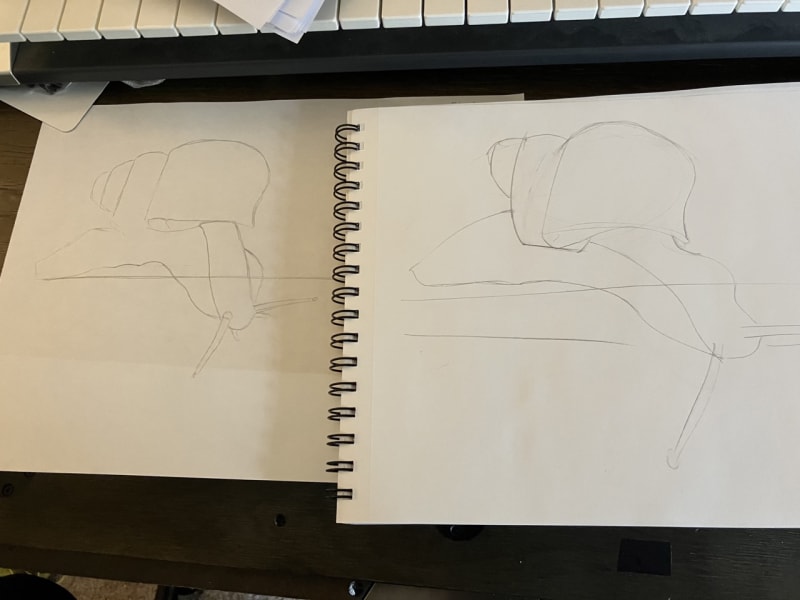

It started out with figure drawing dissections:

And ended with animal construction studies:

Bach - Prelude in C

So dreamy...

I was visiting UNT recently for old times' sake and made it into a practice room with a baby grand!! So much fun!

Did I help someone today?

"Did I help someone today?"

Let me borrow from Simon Sinek's Golden Circle Model for a second: Why, how, and what.

At the end of the day, the bottom line is no small line in business. KPIs are a target to keep an eye on. Performance metrics and weekly outputs are something to continue monitoring. The "what" matters.

In the trenches, tools do matter. The systems we choose to implement, the ways we refactor our code, the time spent experimenting with a new technology. The "how" matters.

What matters most is certainly the "why" of helping someone.

Everything else almost feels like bike shedding when the question "Did I help someone today?" is the main guide.

Software is pretty expansive when it comes to this question, too!

Some days, that answer is "I helped a coworker debug a problem that's been slowing them down for weeks!" A deep and impactful day!

Some days, it's "I released a feature that will be used by thousands of people!" A broad impact sort of day.

The nice thing is, even on "low productivity days," we can answer affirmatively to that question in some dimension, if we're genuine.

New Album — Whiteout ❄️

Creative Insights from Miyamoto and Game Composers

Last year, Dave Rupert shared this amazing resource of translated video game magazines: Shmuplations.

Dave's post highlights some great connections between game development and Miyamoto's advice for success in the game industry. I did more digging and also enjoyed this Miyamoto quote from the same article:

What advice do you have for aspiring game designers?

No matter what your creative field, you should try to find a job that offers you many chances to realize own potential.

Before that, though, I think it’s important to refine your own sensibilities. 10 years from now, games will have changed. It won’t just be the same style of games you see today. If all you do is mimic what exists now, it will be difficult for you to create anything in the future.

I know it’s a cliche, but I think aspiring designers should follow where their curiosity leads, and try to accumulate as many different experiences as possible.

Another vote for following curiosity. Video games were (and still are?!) a young medium, so it's practically a necessity to be looking outside of games for inspiration from the world. There's simply more out there.

I'm excited to keep digging through the crates on this site! Here are a few more of my favorite snippets, this time from Beep Magazine's Game Music Round Table Interview:

What’s the secret to success in the game industry?

I hope this doesn’t come off wrong, but I don’t really remember trying super hard. One day I looked up and noticed things were going pretty well. Of course, I’ve been very blessed to have talented people and teachers around me. So I would probably have to say I didn’t really try super hard to get where I am… it just happened naturally.

A vote for effortlessness. It's what's easy and natural that we end up excelling at, and so, opportunities open. A nice counter to the notion that all that's worthwhile is on the other side of hard work alone.

A couple more on breaking into games. I don't ultimately see myself in games, but it's fun to see how musicians made it in way back when!

What advice do you have for aspiring game designers?

At present there are two in-roads to working in game music. The first is to join a game development company. For that it’s helpful to go to a 4-year college, and all the better if it’s a music school. The other way would be to gain some notoriety as a composer first, perhaps in a rock band or something, but basically if you can build a reputation in the music industry as a player, composer, or arranger, you might end up getting commissioned for this sort of work. Whichever path you choose, having that can-do spirit of “I want to write music no matter what!!” is important. If you can carry that passion with you wherever you go, and sustain it, I think nothing will serve you better.

Just an interesting perspective that still feels true. Though, in the indie world these days, it helps to have another skill to bring to the table: art, development, story writing, etc.

Here's that counterpoint from Mieko Ishikawa, composer for the Ys series:

For those who want to write video game music, I think knowing a bit of computer programming is a big advantage. There’s lots of people out there who can write songs, but if that’s your only talent, I think it will be rough-going in this industry.

Seems like good advice for creative work in general. Go all in on trying to make it on your art alone, and then pick up auxiliary skills to sustain your creative work.

Music Teachers Expand Dreams

Having been a teacher and, generally, someone who places a high value on exploring the world through learning, I end up reading a few books on education.

I'm only just coming across Seth Godin's Stop Stealing Dreams, a manifesto on education. It also happens to be about the values in our society, but education is the driver.

The illustrated version is beautifully done. Here are my favorite points from first glance:

33. Who Will Teach Bravery? The essence of the connection revolution is that it rewards those who connect, stand out, and take what feels like a chance. Can risk-taking be taught? Of course it can. It gets taught by mentors, by parents, by great music teachers, and by life.

44. Defining The Role of A Teacher. Teaching is no longer about delivering facts that are unavailable in any other format... What we do need is someone to persuade us that we want to learn those things, to push us or encourage us or create a space where want to learn to do them better.

56. 1000 Hours. Over the last three years, Jeremy Gleick... has devoted precisely an hour a day to learning something new and unassigned...This is a rare choice...Someone actually choosing to become a polymath, signing himself up to get a little smarter on a new topic every single day... The only barrier to learning for most young adults in the developed world is now merely the decision to learn.

If you've been in music, you've likely had an instructor teach bravery. Sometimes it's the director who encourages you to give it one more try. Or the drum tech who won't give up on a section of percussionists struggling with a tricky passage. Or the private teacher who so wholly embodies their instrument and the musicianship of a piece that it's impossible to resist the allure of striving for that same sense of awe and wonder in our own musicianship.

Those teachers have also created environments where students can be fully immersed in a world of music, no matter how big or small. It's what makes classroom band more special in my mind than solely taking private lessons - you can't beat a thriving community of learners where you're playing a unique role in the musical process. Every band needs a kid on tuba and another on flute playing together.

A somewhat disappointing truth is that many students that graduate high school, having played an instrument for 7 years at that point, don't pick it up again. I think there's a missed opportunity - leading with greater creativity and exploration in the classroom. I'm sure Seth explores those ideas in the book as well.

On the other hand, though, it's still one of the best vehicles for learning resourcefulness that we have. To the last point I've quoted - all the musicians I know have absolutely no problem applying creativity, persistence, and bravery in their endeavors beyond the instrument. I have musician friends who are also photographers, who knit, work with aquaponics, code for fun, mountain climb, and write novels. Many of my colleagues are now master teachers. A significant number of them also have skillfully transitioned into roles in tech, finance, health, marketing, entrepreneurship, and sales.

The vision of the once young musician grows beyond music, beyond the self, beyond a local tribe to a larger community and a greater opportunity for contribution and the wonderful lives that support that.

In that case, music education is doing exactly what I think it is uniquely best at: Expanding Dreams.

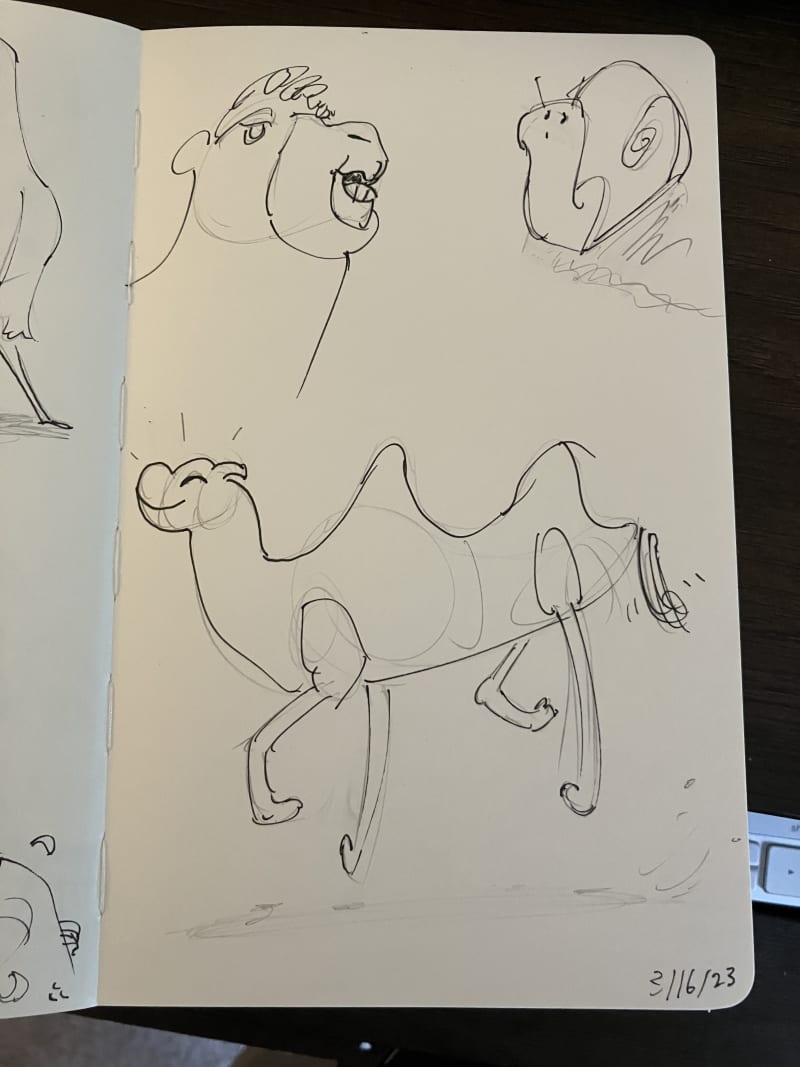

Camels and Snails

Alt Guitar Idea

Shaking that guitar and stretching my barre chords

Backing Up Data

Like flossing: really important to do, hard to get in the habit of.

But I've had too many tragic losses of data growing up. And now my entire life's work happens and is saved on my computer. So I've set up a system for myself!

I mostly follow Wes Bos' Backup Strategy. It keeps things easy and nearly automatic. Specifically:

Backblaze is great because it runs automatically in the background. It's not at all a resource hog.

It's only semi-automatic because I still have a calendar reminder to actually plug in my drive every Friday morning. All of my ports are usually full with MIDI controllers and other gizmos. Ideally, I'd have some sort of monstrous hub that takes in my monitor, MIDI instruments, guitar audio interface, AND USB so I could leave my external drive plugged in. Putting it on the wishlist!

Non-Relational Database is a Misleading Name

My intro to databases was through MongoDB. Coming from a web development perspective, it was comfy! Documents look and work like JSON objects. They're flexible and can adapt to changing schemas. I've enjoyed it!

I'm dipping into SQL this week to broaden my horizons in data land. The first conceptual overview I got was this:

So are relationship structures exclusive to SQL?

SQL

The "relational" distinction comes from the fact that data is stored within tables in SQL.

My e-commerce app would divide its data into three tables:

Each table would hold data specific to its own category. The tables would then be linked through relationships.

Orders, for example, would have a many-to-many relationship with items. Many items can be in many orders. Many orders can have many items.

Users would have a one-to-many relationship with orders: a user can have many orders, but an order is tied to only one user.

So when you're querying the items in a user's order, SQL has to travel through a few relationships:

And thus, SQL gets the relationship moniker.
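To make that walk concrete, here's a minimal sketch in JavaScript rather than SQL. Plain arrays stand in for the tables, and a hypothetical `orderItems` join table holds the many-to-many link between orders and items, which is the usual way a relational database stores that kind of relationship. Each filter step does the work a JOIN would do in a real query. All names and data here are made up for illustration.

```javascript
// Hypothetical "tables" as arrays of rows.
const users = [{ id: 1, name: "Example User" }]; // we start from a user's id
const orders = [
  { id: 10, userId: 1 }, // each order points at exactly one user
  { id: 11, userId: 1 },
];
const items = [
  { id: 100, name: "Guacamole & Chips" },
  { id: 101, name: "Salsa" },
];
// Join table: one row per (order, item) pair, modeling many-to-many.
const orderItems = [
  { orderId: 10, itemId: 100 },
  { orderId: 10, itemId: 101 },
  { orderId: 11, itemId: 100 },
];

// Walk the relationships: user -> orders -> orderItems -> items.
function itemsForUser(userId) {
  const orderIds = orders
    .filter((order) => order.userId === userId)
    .map((order) => order.id);
  const itemIds = orderItems
    .filter((row) => orderIds.includes(row.orderId))
    .map((row) => row.itemId);
  // Each distinct item appears once, even if it was in several orders.
  return items.filter((item) => itemIds.includes(item.id));
}
```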

MongoDB

What made this confusing at first is that MongoDB falls under the non-relational database category, but I can make these same queries in MongoDB as well.

MDN has a terrific guide to Express and databases. When setting up the DB schema for MongoDB, it's done with a relational structure. In their library example, there are schemas for books, authors, book instances, and genres, all tied together through relationships. In Mongo, a document's ID is used to connect these documents.

So the NoSQL descriptor is a more accurate lead. The query language is different, but relationships are still an offering in Mongo.
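Here's a rough sketch of that ID-linking, assuming hypothetical `authors` and `books` collections loosely modeled on MDN's library example. A book stores only its author's `_id`, so resolving the author is a second lookup. In a real Mongoose app, `populate()` handles this step; the hand-rolled `populateAuthor` here is only for illustration.

```javascript
// Hypothetical documents: the book references its author by _id.
const authors = [{ _id: "a1", name: "Example Author" }];
const books = [{ _id: "b1", title: "Example Book", author: "a1" }];

// Resolve the reference by looking up the author document by its _id.
// Returns a new object; the stored book still holds just the id.
function populateAuthor(book) {
  const author = authors.find((a) => a._id === book.author) || null;
  return { ...book, author };
}
```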

The non-relational categorizing, though, is somewhat fair: The main benefit of Mongo is that you can nest in one document what you would otherwise split between tables.

A user document can store their orders within a nested object in MongoDB. Depending on your application, you may not be accessing orders outside of the context of a query to the user. Mongo has a clear healthcare example where patient information is stored in the patient's own document.
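For contrast, a sketch of the embedded approach, using a hypothetical user document with its orders nested inside. One read of the user returns every order, so there's no second lookup across collections. The field names are assumptions, not a prescribed schema.

```javascript
// Hypothetical user document with its orders embedded.
const userDoc = {
  _id: "u1",
  name: "Example User",
  orders: [
    { orderId: "o1", items: [{ name: "Guacamole & Chips", quantity: 1 }] },
    { orderId: "o2", items: [{ name: "Salsa", quantity: 2 }] },
  ],
};

// Everything needed is already inside the one document.
function orderedItemNames(user) {
  return user.orders.flatMap((order) => order.items.map((item) => item.name));
}
```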

Creativity as Conversation

Matthew Hinsley's Form & Essence quotes William Stafford's "A Way of Writing" —

A writer is not so much someone who has something to say as he is someone who has found a process that will bring about new things he would not have thought of if he had not started to say them. That is, he does not draw on a reservoir; instead, he engages in an activity that brings to him a whole succession of unforeseen stories...For the person who follows with trust and forgiveness what occurs to him, the world remains always ready and deep, an inexhaustible environment, with the combined vividness of an actuality and flexibility of a dream. Working back and forth between experience and thought, writers have more than space and time can offer. They have the whole unexplored realm of human vision.

There is no certainty when you sit down to write. That's the magic! It's as much a daunting part of the process as it is the whole spiritual point of doing it in the first place!

While explaining the importance of letting go of certainty in creative process, Matt shares this:

Have you had a good conversation recently? Did you say something interesting, profound even?...If you did, I can almost guarantee that you were accessing your creative self. And I will also suggest that there's no way you could have given that advice, in that way, had you not been having that conversation. It was in the act of conversing, or reacting to energy and idea, that you put thoughts and experiences swirling in your subconscious together into words that became concrete statements in Form as they exited your mouth.

An antidote to the blank page, then, is to make the creative process something other than closing the door, turning off the lights, and staring at a blank page. Instead, make it a conversation.

I've been in a rut with writing music. I haven't been bringing references into my practice. I've been sitting at the keyboard and simply waiting for whatever comes out. While intuition is part of the process, the energy is muted when I start this way.

But nothing kick-starts writing music better than listening to something I love and then wanting to play around with it in my own way. Visual artists aren't cheating when they draw from reference, and neither is this. Quite the opposite: the music is more buoyant and fun when it's in conversation with other recordings and musicians.

This conversation-style of creating is baked into the culture of blogging! Much of blogging is finding something you read that was interesting, then adding your own two cents while connecting it to a particular audience. You're adding your own story to the mix, telling the original author's tale in a way that adds a new dimension, compounding its message, and creating a new audience for the source material.

Creatively conversing turns wonder into art.

Starting a Development Environment from the Command Line

I have a need for speed when developing. That goes for starting up all of my development applications.

Here's what needs to happen before I start coding:

A short list. But it takes a few keystrokes and mouse clicks to get there. I think I can do better!

Shell aliases are the answer.

I have a few set up for different use cases. Here's the one that runs the recipe for the steps above:

```shell
alias cap="cd /Users/cpadilla/code/my-repo && code . && open http://localhost:3000 && source settings.sh && npm run dev"
```

I'm adding this to my .zshrc file at the root of my account.

That turns all five steps into one:

```shell
$ cap
```

Another use case: I keep a list of todos in markdown on my computer. Files are named by the current date. I could do it by hand every time, but there's a better way:

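```shell
alias dsmd='touch $(date +%F).md && open $(date +%F).md'

alias todo="cd /Users/cpadilla/Documents/Todos && dsmd"
```

There we go! `date +%F` prints today's date as YYYY-MM-DD, and `$(...)` drops that output into the command. Note the single quotes around dsmd: with double quotes, the shell would run `date` once when .zshrc loads, so a terminal left open overnight would keep creating yesterday's file. The todo alias navigates to the correct folder and then uses dsmd to create and open the file.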
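If the `%F` format is unfamiliar, it's shorthand for `%Y-%m-%d`. Here's a hypothetical JavaScript helper that builds the same file name, using the local date the way `date` does by default, just to spell the format out.

```javascript
// Hypothetical helper: builds "YYYY-MM-DD.md", matching `$(date +%F).md`.
// Uses local time (not UTC), like `date` does by default.
function todoFileName(date = new Date()) {
  const pad = (n) => String(n).padStart(2, "0");
  const year = date.getFullYear();
  const month = pad(date.getMonth() + 1); // getMonth() is zero-based
  const day = pad(date.getDate());
  return `${year}-${month}-${day}.md`;
}
```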

Small quality of life changes! They add up when you do the same commands every day. 🙂

Integration Testing with Redux

I'm testing a form on an e-commerce app. I've already looked at testing the components that add an item to the cart. Now I need to set up a test for updating an item in the cart.

My app leans on Redux to store the cart items locally. As per the React Testing Library Guiding Principles, I'm going to be asserting my app's functionality by checking what shows in the DOM against my expectations. Notably, I'm doing this instead of asserting the Redux store state. I'll also be integrating a test store and provider into the mix.

The "how" today is Redux. The principle, though, is that if you're using a datastore, cache, or any other source, you want to be integrating it in your tests and not simply mocking it. That goes for either app state management or the data layer.

Mocking

In my app, to accomplish this, I have to render the whole page with the Layout component included:

```jsx
const rendered = renderWithProviders(
  <Layout
    children={
      <CartItemPage
        item={chipsAndGuac}
        itemID={'5feb9e4a351036315ff4588a'}
        onSubmit={handleSubmit}
      />
    }
  />,
  { store }
);
```

For my app, that led to mocking several packages. Here's the full list:

```jsx
jest.mock('uuid', () => ({
  v4: () => '',
}));

jest.mock('next/router', () => {
  return {
    useRouter: () => ({
      query: {
        CartItemID: '5feb9e4a351036315ff4588z',
      },
      push: () => {},
      events: {
        on: () => {},
        off: () => {},
      },
    }),
  };
});

jest.mock('@apollo/client', () => ({
  useQuery: () => ({
    data: { itemById: { ...chipsAndGuac } },
  }),
  useLazyQuery: () => ['', {}],
  useMutation: () => ['', {}],
  gql: () => '',
}));

afterEach(() => {
  cleanup();
});

jest.mock(
  'next/link',
  () =>
    ({ children }) =>
      children
);
```

I'm not testing any of the above, so I'm not sweating it too much. It did take some doing to get the right format for these, though.

Redux Testing Utilities

You'll notice that my render method was actually renderWithProviders. That's a custom utility method. The Redux docs outline how you can set this up in your own application here.

That's it! That's the magic sauce. 🥫✨

The philosophy behind it is this: You don't need to test Redux. However, you do want to include Redux in your test so that you have greater confidence in your test. It more closely matches your actual environment.

You can take it a step further with how you load your initial state. You could pass in a custom state to your initStore() call below. But a more natural approach is to fire dispatch calls with the values you're expecting from your user interactions.

Here I'm doing just that to load in my first cart item:

```jsx
test('<CartItemPage />', async () => {
  const formExpectedValue = {
    cartItemId: '5feb9e4a351036315ff4588z',
    id: '5feb9e4a351036315ff4588a',
    image: '/guacamole-12.jpg',
    name: 'Guacamole & Chips',
    price: 200,
    quantity: 1,
    selectedOptions: {
      spice: 'Medium',
    },
  };

  // Load the cart by dispatching, rather than handing the store a finished state
  const store = initStore();
  store.dispatch({
    type: 'ADD_TO_CART',
    payload: {
      ...formExpectedValue,
    },
  });

  // . . .
});
```

From there, you're set to write your test. That's all we need to do with Redux. From here, we'll verify the update is happening as it should by reading the values in the DOM after I click "Save Order Changes."
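Why dispatch instead of preloading? Here's a rough sketch with a hypothetical `cartReducer` and a tiny hand-rolled store standing in for Redux (the app's real reducer and `initStore()` aren't shown in this post). Both routes end with the same cart, but the dispatch route also runs the reducer, which is presumably the same code path a real "add to cart" interaction takes.

```javascript
// Hypothetical reducer; the app's real cart reducer isn't shown in the post.
function cartReducer(state = { items: [] }, action) {
  switch (action.type) {
    case "ADD_TO_CART":
      return { ...state, items: [...state.items, action.payload] };
    default:
      return state;
  }
}

// Minimal stand-in for a Redux store: holds state, runs the reducer on dispatch.
function createTestStore(reducer, preloadedState) {
  // Made-up init action so the reducer can fill in its default state.
  let state = reducer(preloadedState, { type: "@@init" });
  return {
    getState: () => state,
    dispatch(action) {
      state = reducer(state, action);
      return action;
    },
  };
}

const cartItem = {
  cartItemId: "5feb9e4a351036315ff4588z",
  name: "Guacamole & Chips",
  quantity: 1,
};

// Route 1: hand the store a finished state (skips the reducer logic).
const preloaded = createTestStore(cartReducer, { items: [cartItem] });

// Route 2: start empty and dispatch, like the test in this post.
const dispatched = createTestStore(cartReducer);
dispatched.dispatch({ type: "ADD_TO_CART", payload: cartItem });
```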

The details here aren't as important as the principles, but here is the full test in action:

test('<CartItemPage />', async () => {

const formExpectedValue = {

cartItemId: '5feb9e4a351036315ff4588z',

id: '5feb9e4a351036315ff4588a',

image: '/guacamole-12.jpg',

name: 'Guacamole & Chips',

price: 200,

quantity: 1,

selectedOptions: {

spice: 'Medium',

},

};

const handleSubmit = jest.fn();

const store = initStore();

store.dispatch({

type: 'ADD_TO_CART',

payload: {

...formExpectedValue,

},

});

const rendered = renderWithProviders(

<Layout

children={

<CartItemPage

item={chipsAndGuac}

itemID={'5feb9e4a351036315ff4588a'}

onSubmit={handleSubmit}

/>

}

/>,

{ store }

);

const pageTitleElm = await rendered.findByTestId('item-header');

expect(pageTitleElm.innerHTML).toEqual('Guacamole & Chips');

const customizationSection = await rendered.findByTestId(

'customization-section'

);

const sectionText = customizationSection.querySelector(

'[data-testid="customization-heading"]'

).innerHTML;

expect(sectionText).toEqual(chipsAndGuac.customizations[0].title);

const spiceOptions = await rendered.findAllByTestId('option');

const firstOption = spiceOptions[0];

expect(!firstOption.className.includes('selected'));

fireEvent.click(firstOption);

const updateCartItemButtonElm = await rendered.findByTitle(

'Save Order Changes'

);

expect(firstOption.className.includes('selected'));

fireEvent.click(updateCartItemButtonElm);

const cartItemRows = await rendered.findAllByTestId('cart-item-row');

const firstItemElm = cartItemRows[0];

const firstItemTitle = firstItemElm.querySelector(

'[data-testid="cart-item-title"]'

);

const customizationElms = firstItemElm.querySelectorAll(

'[data-testid="cart-item-customization"]'

);

expect(firstItemTitle.innerHTML).toEqual('1 Guacamole & Chips');

const expectedCustomizations = ['Mild'];

expect(customizationElms.length).toEqual(expectedCustomizations.length);

customizationElms.forEach((customizationElm, i) => {

expect(customizationElm.innerHTML).toEqual(expectedCustomizations[i]);

});

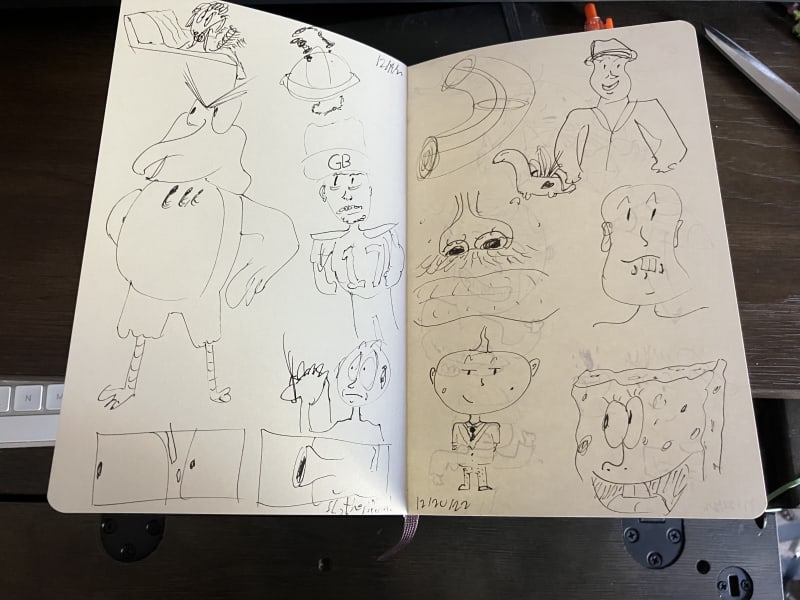

});Finishing Sketchbook No. 2

Another sketchbook down!!

This go-round, I alternated pages between two kinds of drawing. One page was drawing from imagination (visual journaling, as I've thought of it). The other pages were studies, largely of older pieces by Louie Zong. What can I say, I love his work!

Here's where I started:

And where I'm leaving this book:

The left page is a study on this painting by Louie Zong.

Big change!! I'm spending less time getting many ideas out at once, and more time methodically getting a detailed character onto the page. That tracks for this book!

Here's my favorite page, following my trip to Denver with Miranda:

On to the next one!

Faber - Chanson

Pretty little tune. ✨

According to these books, I'm about to go from a level four pianist to a level five! 💪😂